Mon-Fri, 9:00-17:00 (Beijing Time, UTC+8)

Frontier Insights

We are dedicated to advancing the technology industry and sharing expertise in technical, business, and cultural domains.

Ultimate Guide to Sitemaps in 2025: From "Manual Maintenance" to "Intelligent Automation"

Publication date: January 4, 2026

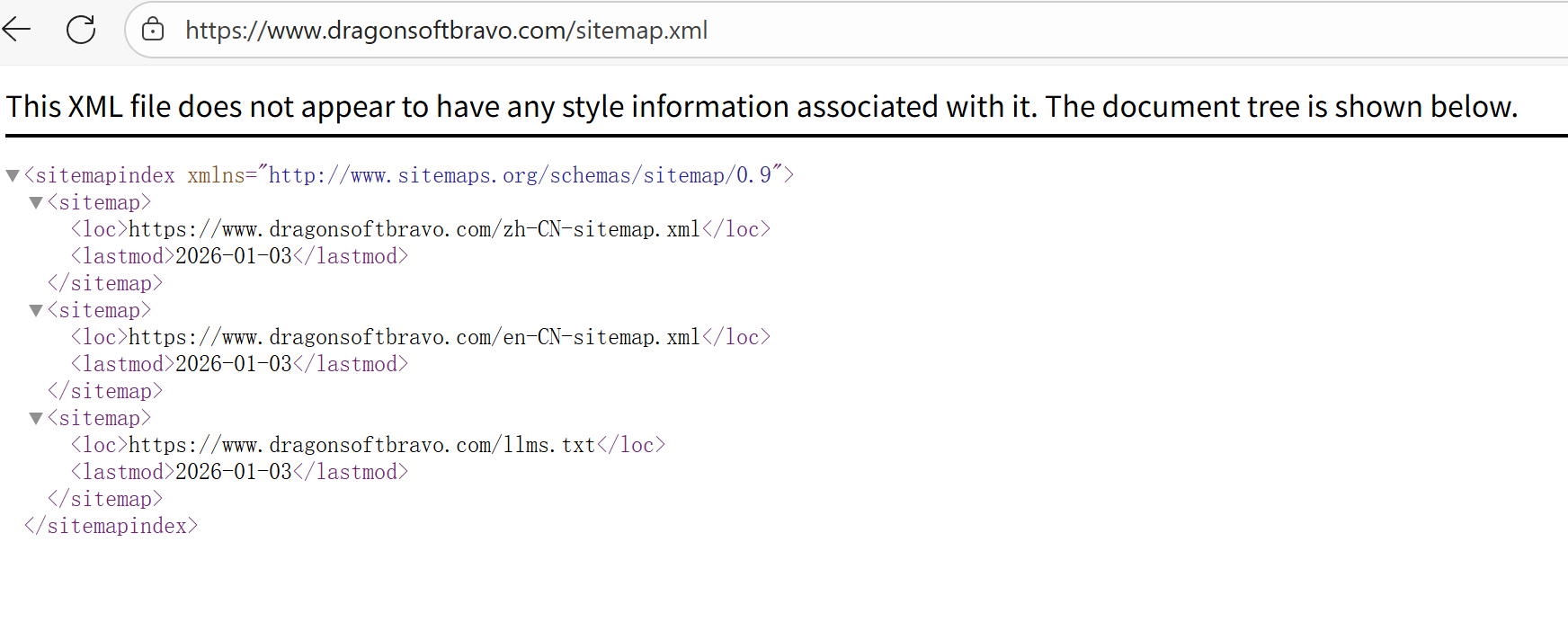

The "Ultimate Guide to Sitemaps in 2025" makes one thing clear: in today's technical SEO, a sitemap is more than a list of URLs. It is an extensive ecosystem of tools.

As outlined in relevant technical guidelines (aibase.dev), standard XML sitemaps, image sitemaps, video sitemaps, and sitemap index files for large-scale sites form the foundation for search engines to understand your website. However, with websites growing exponentially and the dawn of the AI search era, merely knowing "what a sitemap is" is no longer sufficient. The real challenge enterprises face is how to generate and maintain sitemaps efficiently.

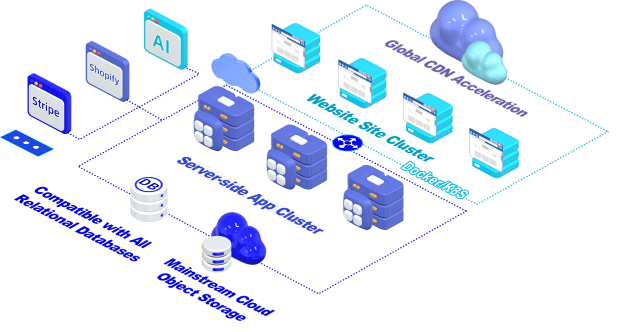

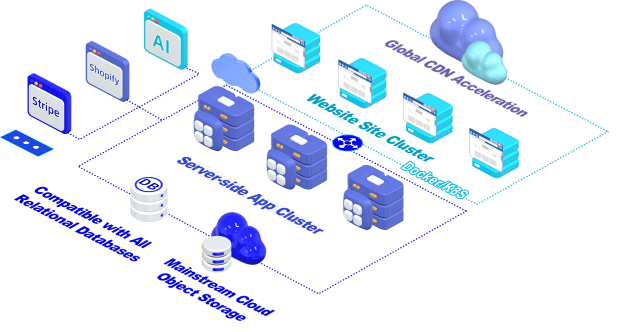

This is exactly where the BMS Digital Experience Platform comes in. Its Experience Manager turns the tedious task of sitemap maintenance into a fully automated backend process that scales to sites with millions of pages.

Three Major Pain Points of Traditional Sitemap Maintenance

Without strong CMS support, SEO and development teams often fall into the following traps:

1. Large Site Indexing Difficulties

Google and other search engines impose strict limits on individual sitemap files (50,000 URLs or 50 MB uncompressed). When a website exceeds either limit, the URLs must be split across multiple child sitemaps and a sitemap index file must be created to list them, a job that is often done by hand.

2. Content Sync Delays

Marketing teams publish new campaign pages or update product documentation, but the sitemap remains unchanged. Search engine crawlers fail to discover new content promptly, causing indexing delays and wasting valuable marketing windows.

3. Falling Behind in the AI Era

In 2025, it's not only Google that needs to read your site. AI agents like ChatGPT, Claude, and Perplexity do too. Traditional XML sitemaps list URLs but carry no content summaries, so they do little to help LLMs (Large Language Models) read a site efficiently.

The BMS DXP Experience Manager perfectly solves these issues, achieving three major breakthroughs:

1. Breaking Limits: Automatic Sitemap Tiering and Indexing

For large enterprise websites, e-commerce platforms, or sites with vast numbers of blog posts, a single sitemap file has long been inadequate.

BMS DXP Solution:

BMS DXP intelligently detects your site’s scale. When your content count exceeds search engine thresholds (e.g., 50,000 items), the system automatically applies a tiering strategy:

Automatic Index File Generation

BMS DXP automatically generates a parent sitemap_index.xml file.

Smart Splitting

The system automatically divides large URL sets into sub-files such as sitemap-blog.xml and sitemap-products-01.xml, based on content type (e.g., blog, product, landing page) or publication date.

You don’t need to write any scripts or manually split files. No matter how large your site grows, BMS ensures submitted files comply with standards, avoiding crawl errors due to oversized files.
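The tiering idea described above can be sketched in a few lines of Python. This is a minimal illustration only, not BMS DXP's actual implementation: the function names (`chunk`, `build_urlset`, `build_tiered_sitemaps`), the file-naming pattern, and the in-memory dictionary of files are all assumptions made for the example. Only the 50,000-URL limit and the `urlset` / `sitemapindex` element names come from the sitemaps protocol.

```python
from datetime import date
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit from the sitemaps protocol


def chunk(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_urlset(urls):
    """Return the XML text of one child sitemap listing `urls`."""
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc in urls:
        ET.SubElement(ET.SubElement(root, "url"), "loc").text = loc
    return ET.tostring(root, encoding="unicode")


def build_tiered_sitemaps(urls_by_type, base_url, max_urls=MAX_URLS_PER_FILE):
    """Split URLs by content type, then by size; return {filename: xml_text}.

    The result always contains a parent "sitemap_index.xml" that points at
    every child file (hypothetical naming: sitemap-<type>-<nn>.xml).
    """
    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    today = date.today().isoformat()
    for content_type, urls in sorted(urls_by_type.items()):
        for n, part in enumerate(chunk(urls, max_urls), start=1):
            name = f"sitemap-{content_type}-{n:02d}.xml"
            files[name] = build_urlset(part)
            entry = ET.SubElement(index, "sitemap")
            ET.SubElement(entry, "loc").text = f"{base_url}/{name}"
            ET.SubElement(entry, "lastmod").text = today
    files["sitemap_index.xml"] = ET.tostring(index, encoding="unicode")
    return files
```

Calling `build_tiered_sitemaps({"blog": blog_urls, "products": product_urls}, "https://example.com")` returns one entry per child file plus the index. A production version would also need to check the 50 MB uncompressed size limit, which this sketch ignores.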

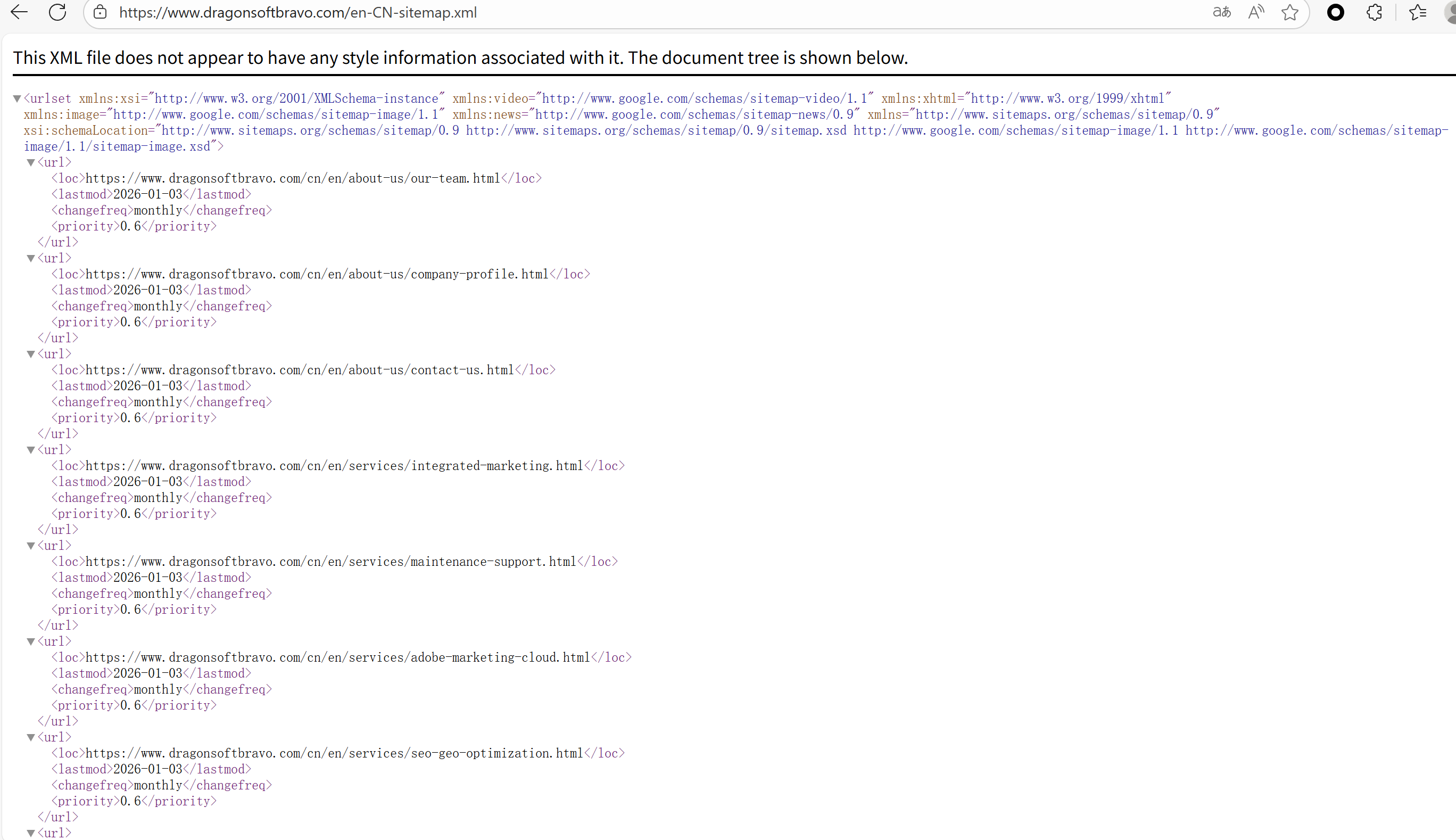

2. Defining "Real-Time": Sitemap Auto-Update

In traditional CMS or static site generators, updating a sitemap often requires rebuilding the entire project or relying on scheduled tasks (Cron jobs). This creates a time lag between "content publishing" and "sitemap updates".

BMS DXP Solution:

BMS DXP implements an event-driven update mechanism.

Instant Synchronization

When marketers click the "Publish" or "Edit" button in the backend, BMS DXP instantly injects the newly generated URL into the corresponding sitemap file and updates the <lastmod> timestamp.

Dead Link Cleanup

When you de-list a product or article, BMS DXP automatically removes it from the sitemap, preventing search engines from crawling 404 pages and protecting overall site authority.

This automation keeps sitemap metadata such as <lastmod> up to date, signaling search engine crawlers to revisit changed pages. Google documents that it ignores <changefreq> and <priority>, so an accurate <lastmod> is the signal that matters most.
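An event-driven update of this kind can be sketched as a pair of handlers that run when content is published or de-listed. The sketch below is an assumption-laden illustration, not the BMS DXP mechanism: `SitemapStore`, `on_publish`, `on_unpublish`, and `render` are hypothetical names, and the sitemap is held in memory rather than written to disk.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from xml.sax.saxutils import escape


@dataclass
class SitemapStore:
    """In-memory stand-in for one sitemap: maps each URL to its lastmod."""
    entries: dict = field(default_factory=dict)

    def on_publish(self, url, when=None):
        """Publish or edit event: add the URL or refresh its lastmod."""
        when = when or datetime.now(timezone.utc)
        stamp = when.astimezone(timezone.utc).isoformat(timespec="seconds")
        self.entries[url] = stamp

    def on_unpublish(self, url):
        """De-list event: drop the URL so crawlers are not sent to a 404."""
        self.entries.pop(url, None)

    def render(self):
        """Serialize the current entries as sitemap XML text."""
        lines = [
            '<?xml version="1.0" encoding="UTF-8"?>',
            '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
        ]
        for url, lastmod in sorted(self.entries.items()):
            lines.append(
                f"  <url><loc>{escape(url)}</loc>"
                f"<lastmod>{lastmod}</lastmod></url>"
            )
        lines.append("</urlset>")
        return "\n".join(lines)
```

The point of the design is that the sitemap changes in the same code path as the content change, so no rebuild or cron job sits between publishing and the updated `<lastmod>`.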

3. Future-Proofing: Automatic Generation of llms.txt (AI-Ready Infrastructure)

This is the most critical SEO trend for 2025 and beyond.

Traditional robots.txt and sitemap.xml were designed for search engine crawlers. In the age of AI search, AI bots like OpenAI’s GPTBot and Anthropic’s ClaudeBot need more efficient, structured ways to understand your website content so they can accurately cite your brand information in AI-generated responses. This has led to the emergence of the proposed llms.txt standard.

BMS DXP Solution:

BMS DXP is among the first to support automatic generation of llms.txt, helping businesses gain an early advantage through AI Search Optimization (AISO):

Optimized for Machine Reading

BMS DXP can automatically extract Markdown summaries or plain text versions of core website content and integrate them into llms.txt.

Curated Context

Not all web pages are suitable for feeding to AI. BMS DXP allows you to specify which high-value content (e.g., core product descriptions, whitepapers, help documentation) should be included in llms.txt, ensuring large models learn the most accurate and high-quality information about your business.

Automated Maintenance

Just like XML Sitemaps, llms.txt updates automatically as content changes, ensuring AI Agents always access current information.
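To make the three points above concrete, here is a minimal sketch of generating an llms.txt file from curated pages. It assumes the Markdown layout used by the llms.txt proposal (an H1 title, a blockquote summary, then H2 sections containing link lists). The `Page` class, the `include_in_llms` flag, and `render_llms_txt` are hypothetical names for illustration and do not describe BMS DXP's internals.

```python
from dataclasses import dataclass


@dataclass
class Page:
    title: str
    url: str
    summary: str
    section: str
    include_in_llms: bool = True  # hypothetical per-page editorial flag


def render_llms_txt(site_name, site_summary, pages):
    """Return llms.txt text: H1, blockquote summary, H2 link sections."""
    lines = [f"# {site_name}", "", f"> {site_summary}", ""]
    sections = {}
    for page in pages:
        if page.include_in_llms:  # curated context: skip excluded pages
            sections.setdefault(page.section, []).append(page)
    for section, items in sections.items():
        lines.append(f"## {section}")
        lines.append("")
        for page in items:
            lines.append(f"- [{page.title}]({page.url}): {page.summary}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"
```

Re-running `render_llms_txt` from the same publish and de-list events that update the XML sitemap would keep both files in step with the content.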

Summary: Make Technology Invisible, Make Marketing Visible

As modern CMS platforms such as Adobe AEM and Brightspot show, content management systems have evolved from simple publishing tools into digital experience platforms.

With BMS Digital Experience Platform (BMS DXP), you no longer need to worry about XML syntax, file size limits, or AI crawling protocols.

1. Automatic Sitemap Tiering: Solves indexing challenges for large sites.

2. Automatic Sitemap Updates: Eliminates indexing delays.

3. Automatic llms.txt Generation: Secures early access to AI traffic.

Reduce the time cost caused by technical complexity to zero, allowing marketing teams to focus on creating content while BMS DXP ensures the world—both humans and AI—can see it.

⭐ Learn more about BMS DXP Experience Manager capabilities: BMS Digital Experience Platform - Experience Manager

FAQ

1. Why do large websites need Automatic Tiering for their sitemaps?

For large websites with many product pages or articles, search engines like Google enforce strict limits on a single XML sitemap file (50,000 URLs or 50 MB uncompressed). A file that exceeds the limit and is not split may be rejected, so the excess pages cannot be discovered through the sitemap.

BMS DXP (BMS Digital Experience Platform) solves this problem through automatic tiering. When the content volume exceeds the limit, BMS DXP automatically generates a parent sitemap_index.xml file and intelligently splits the URLs into multiple sub-files (such as by date or category), ensuring that all pages can be submitted for indexing without manual intervention.

2. How does BMS DXP ensure that sitemap updates happen in real time?

Traditional CMSs often rely on scheduled tasks (such as once a day) to rebuild the sitemap, which results in a "time lag" between content publishing and crawling.

BMS DXP Experience Manager uses an event-driven mechanism. Whenever you publish new content, update an existing page, or remove an old article, the system writes the change to the sitemap in real time and updates the `<lastmod>` timestamp. Googlebot can then pick up the change the next time it fetches the sitemap, which improves indexing speed. De-listed URLs are removed automatically, so crawlers are not sent to dead links (404 errors) and website authority is protected.

3. What is llms.txt, and why does SEO need it in 2025?

llms.txt is a proposed standard for the AI search era, often described as the "robots.txt for AI." It is intended to help AI agents like ChatGPT, Claude, and Perplexity read your website.

Traditional sitemaps are for search engine crawlers, while llms.txt helps Large Language Models (LLMs) understand your website structure and core content more clearly and accurately. By automatically generating llms.txt through BMS DXP, you can proactively feed optimized content summaries to AI, helping your brand information be referenced accurately in AI-generated answers and positioning your site for future traffic sources.

4. Can BMS DXP manage both XML Sitemap and AI search index files simultaneously?

Yes, this is one of the core advantages of BMS Experience Manager. You don't need to install multiple plugins or write complex scripts. As an all-in-one platform, BMS DXP can automatically maintain both the traditional sitemap.xml (for Google/Bing ranking) and the emerging llms.txt (for AI search visibility) in the background. Regardless of how technology standards evolve, BMS DXP ensures your content infrastructure is always in optimal condition.

5. Does using BMS DXP to manage sitemaps directly help SEO rankings?

It has significant indirect benefits. While the sitemap itself is not a direct factor in ranking, efficient crawling is fundamental.

The automated management provided by BMS DXP enables:

1. Maximizing crawl budget: Preventing crawlers from wasting resources on invalid pages through real-time updates and dead link cleanup.

2. Accelerating new page indexing: Instantly notifying search engines of the presence of new content.

3. Increasing AI citation rate: Increasing exposure in AI search results (SGE) by using llms.txt.

Want to know more about our products?

With years serving Fortune 500 clients, we offer flexible solutions and integrated implementation.

Xiaohongshu

WeChat Channels

Douyin

